I’m not sure how to interpret this data…

I wonder what they meant when they said that.

I think I’m seeing some patterns here, but…

… I wonder if other people would draw the same conclusions.

Am I focusing on the right things…

…as I analyze this information?

While we won’t nerd out on which statistical software might be best equipped for this work, we will outline how to make sense of the rich insights you’ve gained from young people, and even how to include them in the interpretation process!

This chapter brings us to the key step of making meaning from all the data and input you’ve collected from youth! We’ll focus specifically on how to involve young people in this process of meaning-making and analysis, because that’s kinda our jam. You can also go sit alone in a room with your statistical software if you like that sort of thing… we just won’t cover that here. 🙂

Coding & thematic analysis

Involving youth in the analysis.

Sometimes you may want to involve youth in actually analyzing the raw data from your project and helping to identify themes. This can be a really powerful way to approach inductive coding, as young people’s “read” of the data might be very different from your team’s perspective on that same data. If you choose to do this, there are many logistical issues to think about, including protecting participant confidentiality, providing enough training for your youth co-researchers to conduct analyses, and making sure the youth benefit from participating in this analytical work rather than just doing “grunt work” for your team. For a great overview of these and other issues, we suggest you check out this article by Clark et al. (2022).

Example from Character Lab

One of our school partners had a group of 12th-grade students who wanted to conduct research in their district about various issues related to student diversity and inclusion. Because they wanted the results to be as useful as possible, they reached out to us to see whether any of the researchers in our network would be interested and willing to provide them with methodological support.

We found a research partner who was willing to engage with them, and introduced the researcher to the teacher working with the students so they could talk about logistics, timelines, and the students’ existing knowledge and interests. The researcher then joined the class via Zoom to support students as they designed surveys. Once the surveys were developed, the students worked with their district to distribute them and collect the data. When data collection is complete, the researcher and her team will support the students in data analysis and inference. By providing the technical support students need to do research well, this partnership lets them set the research agenda, focus on the questions they care most about, make meaning of the results, and advocate for change based on the data.

Co-interpretation of the findings

Loop youth back in after your initial analysis.

Sometimes your team will choose to do initial coding and thematic analysis of the data on your own, and then bring young people back in to help you further interpret your emerging findings. We call this co-interpretation.

Co-interpretation of findings starts with deciding which findings to present to youth. You are likely to have many rich findings from doing research with young people, and it might not be realistic or necessary to bring all of them to your youth partners. To decide which ones to bring to youth advisors, you may want to focus on this question: Which findings are surprising (or unsurprising), curious, or notable, or feel incomplete or don’t sit right with you? These are often ripe for youth co-interpretation.

Example from Center for Digital Thriving

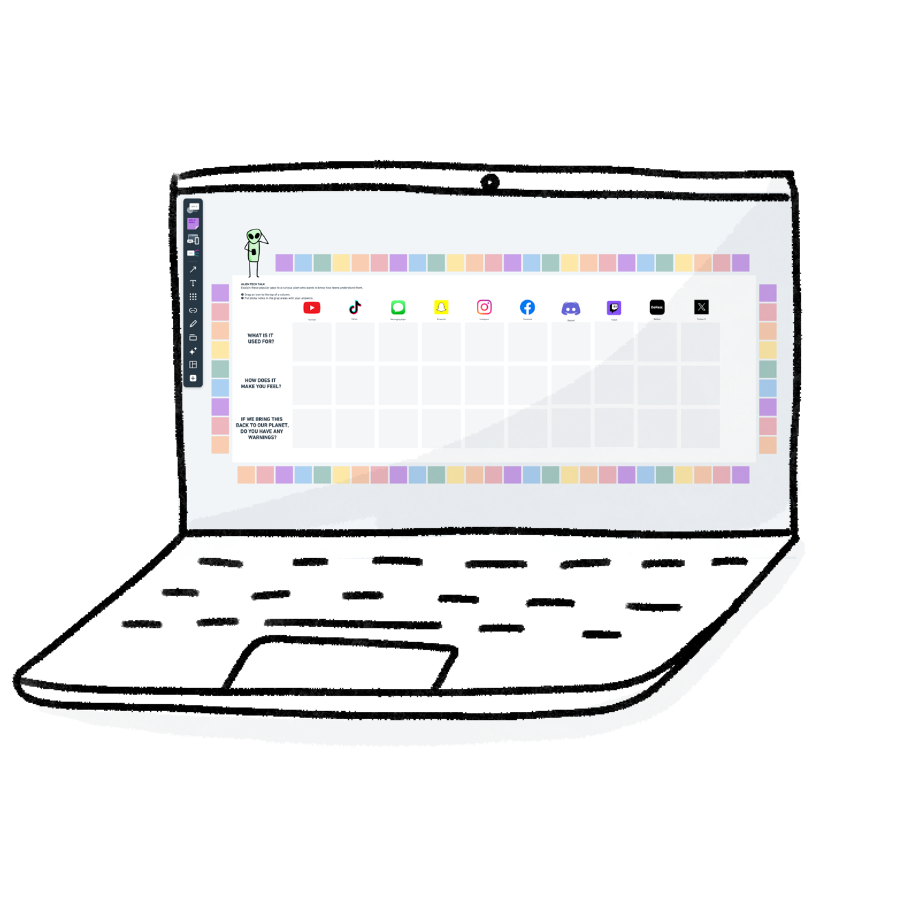

When we conducted the research for the book Behind Their Screens, we collected fascinating survey data about how young people (especially tween girls) navigated sexting pressures. From this data, we started to develop a set of “reasons why teens sext” to bring to our youth advisors. This list included insights based on the survey data – things like “They hope to impress someone they like. They’re seeking praise, validation, or attention from a crush” and “They fear social consequences if they refuse.” Using the digital whiteboard feature in Zoom, we invited our advisors to place a * next to reasons that resonated, an X next to reasons that didn’t feel relevant, and a ? if they weren’t sure or had some wonderings. Then we invited teens to elaborate on their feedback and also to help build out the list with any reasons that felt relevant but were missing from the list. This process — carried out across multiple youth advisory groups — resulted in a teen-validated set of “9 reasons why teens sext (even when they know the risks)” along with qualitative accounts of teens’ experiences and strategies they’ve adopted to manage sexting situations.

Example from Hopelab

We used an emoji reactions activity to have participants in focus groups respond to the main findings of our survey. To do this, we gave teens a bank of emojis representing different reactions (e.g., surprised, unsurprised, skeptical, interested) and asked them to vote on how they felt about each survey finding (e.g., “A majority of teens responded X in our survey”). Knowing that conformity and social desirability pressures are particularly acute in adolescence, and wanting to minimize them in a group setting, we turned on ‘private mode’ (a common feature in most collaboration boards) during the voting exercise to reduce groupthink. After voting, we discussed similarities and differences in voting patterns.

Later in the focus group, we discussed teens’ hopes and concerns about how these results would be framed and the audiences with whom they would be shared. We asked questions like ‘Ideally, what would change as a result of this report?’ and ‘Who do you think most needs to hear these findings, and why?’ From these conversations, we learned that young people were aware that social media was a mixed bag when it came to their well-being, and wanted it portrayed in its full complexity rather than having its potential downsides sugarcoated. At the same time, they were quite concerned about adults’ perception of social media as the source of all mental health problems, and afraid this misunderstanding could lead to bans that would cut them off from important sources of information and support. Teens’ advocacy for ‘educating’ about the harms of social media, but not ‘over-regulating or banning access’, shaped the framing of our report.

Resources, activities, and digging deeper

(See Links Below)

Co-interpretation activities on a virtual whiteboard:

This example from Hopelab and this example from the Center for Digital Thriving both show fun, participatory methods for inviting young people to react to emerging findings.

Qualitative study about involving youth in research

The methods section of this Health Expectations article walks through a detailed example of how a research team worked with young people as both co-researchers and study participants, and describes how to use reflexive thematic analysis to synthesize the data.

Involving youth in thematic analysis:

This takes some training and not every project will want to do this. But if you do, this overview of how to conduct thematic analysis is a really straightforward, easy-to-understand resource you can adapt to help train young people in how to do this work, and this exercise from the IES can also be a really useful practice activity!

What to Read Next

Wow, you’re almost at the end of this adventure. You’ve got findings generated in partnership with young people, which have been validated by young people… but how do you make sure the world learns from them? And how do you involve young people in sharing these insights? Turn to chapter 9 to explore this final frontier!